A Haptic Solution for Measuring Liquid Levels

Duration: 3 Weeks

Team Members: 3 Students + Me

My Role: Ideation, Sketching, A/B Testing Protocol

Tools: Arduino, Pen & Paper

OVERVIEW

For our emerging technology project, we explored how haptics could be integrated into an existing problem space. We focused on people who are visually impaired, because we saw an opportunity for haptics to improve their daily lives and ease problems they face. Our next step was to choose a specific problem to explore and figure out how we might begin to address it.

OUR PROCESS

• SECONDARY RESEARCH

• IDEATIONS

• NARROWING SCOPE

• EXPERIENCE/TECHNICAL TESTING

• TAKEAWAYS

• WHAT OUR TEAM LEARNED/NEXT STEPS

PROBLEM SPACE & SCOPE

HOW MIGHT WE USE HAPTICS TO IMPROVE AND INNOVATE TECHNOLOGY FOR PEOPLE WHO ARE VISUALLY IMPAIRED?

According to a list on Ranker, there are 14 everyday problems that we would never have thought about. One of them is, “It’s challenging to determine how much liquid is in a glass” (Andress). From there, we started ideating around tasks in the kitchen, from cooking to baking and measuring. This led us to ask: what if something is hot, like the oven? How would someone who is visually impaired navigate it? We watched two YouTube videos showing how visually impaired people work their way around an oven. It was useful to learn that they first explore the oven by touch while it is cold, learning how to place the tray in and take it out, as well as which objects might get in their way when setting the tray on the table. Ultimately, however, we settled on the problem of knowing how much liquid is in a cup at any given time.

One question came to mind: how do they know the liquid level in a thermos, which is already heavy because of the materials it is made from? We read that they place their hand on the lip of the cup and then pour. We took this a step further and began wondering what they do when the liquid is hot, like coffee. “If a blind person forgets to pour their drink mindfully, they might end up with coffee all over the counter (or themselves)” (Andress). This led to our idea: using haptics to let a user who is visually impaired know the volume of liquid in a cup, specifically a thermos, at any given time.

SECONDARY RESEARCH

Our team began the project with secondary research. We did not know anyone who is visually impaired, and we did not think it would be ethical to approach a visually impaired stranger to do primary research. Therefore, all of our knowledge comes from secondary research.

WHAT IS CURRENTLY OUT THERE?

• WeWalk

- A smart cane with an ultrasonic sensor that warns the user of overhead obstacles using vibration motors. It also integrates with a smartphone for navigation, and the cane gives voice instructions on where and when to turn.

• Eye See

- A helmet developed by college students that helps visually impaired users “see the world” by describing objects and people to them. It also emits a warning sound when the user gets too close to an obstacle.

• The Dot

- A wearable watch that gives the user notifications in braille.

IDEATION

Here are two sketches from our ideation on how sensors could be used on a cup. We also considered placing a weight sensor at the bottom of the thermos to estimate the water level from the weight of the water, or an ultrasonic sensor at the top of the thermos to measure the liquid level. However, for this project we chose to focus on the feedback system.
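As a rough illustration of how either sensing idea could turn a raw reading into a liquid level, here is a minimal Python sketch. It is hypothetical and was not part of our prototype: the function names, the 500 mL capacity, the 350 g empty weight and the 20 cm inner depth are all assumed values, and it treats every drink as having the density of water. The weight version has to subtract the thermos's own weight first, which is exactly why a heavy thermos is hard to judge by feel alone.

```python
# Hypothetical sketch: estimating how full a thermos is from either sensor
# we considered. All dimensions and readings are assumed example values.

WATER_DENSITY_G_PER_ML = 1.0  # assumption: the drink is about as dense as water


def fill_from_weight(reading_g: float, empty_weight_g: float, capacity_ml: float) -> float:
    """Weight sensor at the base: subtract the empty thermos (tare) weight,
    convert the remaining grams of liquid to millilitres, and return the
    fraction of capacity in use, clamped to the range [0, 1]."""
    liquid_ml = (reading_g - empty_weight_g) / WATER_DENSITY_G_PER_ML
    return min(max(liquid_ml / capacity_ml, 0.0), 1.0)


def fill_from_ultrasonic(distance_cm: float, inner_height_cm: float) -> float:
    """Ultrasonic sensor at the top, pointing down: the measured distance is
    the empty space above the liquid, so the liquid height is whatever is
    left of the inner height. Assumes straight (cylindrical) walls."""
    liquid_height_cm = inner_height_cm - distance_cm
    return min(max(liquid_height_cm / inner_height_cm, 0.0), 1.0)


# Example: a 500 mL thermos that weighs 350 g empty and is 20 cm deep inside.
print(fill_from_weight(600.0, empty_weight_g=350.0, capacity_ml=500.0))  # 0.5
print(fill_from_ultrasonic(5.0, inner_height_cm=20.0))                   # 0.75
```

Either function produces the same 0 to 1 fill fraction, so the feedback system can be designed independently of which sensor engineers eventually choose.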

EXPERIENCE/TECHNICAL TESTING

With the sensor we were given for this protocol, we quickly realized that we could not determine its range of intensity. In the future, we hope to rebuild this prototype so that it can accurately answer all of the questions in the original protocol. In this round, we set out to test only the placement and variability of the vibration haptics. Engineers would later test how the cup determines the water level, whether through an ultrasonic sensor or a weight sensor.

Usability Testing Goal: Determine whether users can feel differences in liquid volume through vibrations.

Major Goals/Questions:

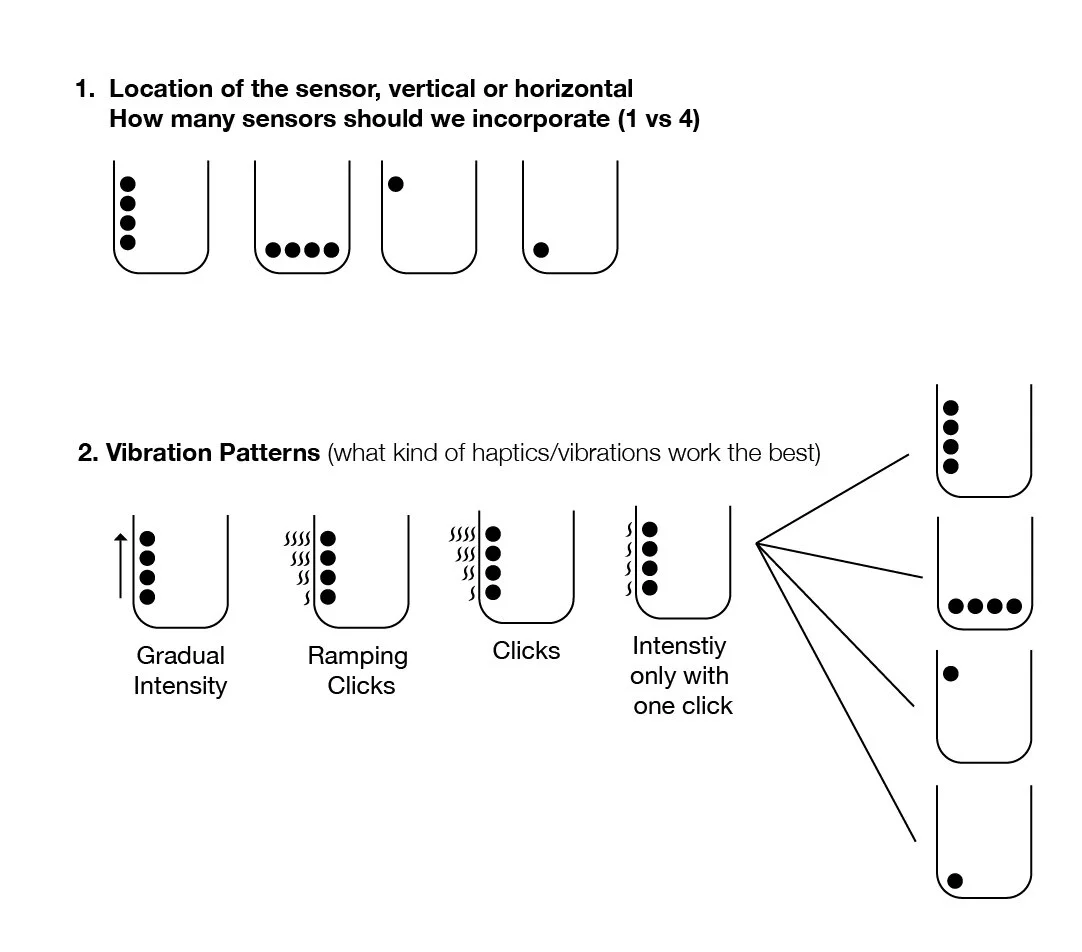

• Determine how much the sensor's location matters: vertical or horizontal

• Determine how many sensors to incorporate (1 vs. 4)

• Determine which haptics/vibrations work best (intermittent, ramping clicks, clicks, or intensity only with one click)
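To make the four candidate patterns concrete, here is a minimal Python sketch of how each one might encode a fill level. Our protocol does not define the patterns this precisely, so the encodings are assumptions: "clicks" sends one identical click per quarter of liquid, "ramping clicks" sends one click per quarter with each stronger than the last, "intensity only with one click" sends a single click whose strength carries the level, and "intermittent" buzzes on and off faster as the cup fills. All function names, timings, and the rounding to quarters are hypothetical; intensity uses a 0 to 255 scale, the 8-bit PWM range that Arduino's `analogWrite` accepts.

```python
# Hypothetical sketch of the four vibration patterns compared in Task Two.
# A pattern is a list of pulses: (intensity 0-255, on_ms, off_ms).
# How each pattern encodes the fill level is an assumption, not a tested design.

from typing import List, Tuple

Pulse = Tuple[int, int, int]
MAX_INTENSITY = 255  # full strength on an 8-bit PWM scale


def quarters(fill: float) -> int:
    """Round a 0-1 fill fraction to the nearest quarter (halves round up):
    0 means empty, 4 means full."""
    clamped = min(max(fill, 0.0), 1.0)
    return int(clamped * 4 + 0.5)


def clicks(fill: float) -> List[Pulse]:
    """One identical short click per quarter of liquid."""
    return [(MAX_INTENSITY, 50, 150)] * quarters(fill)


def ramping_clicks(fill: float) -> List[Pulse]:
    """One click per quarter, each click stronger than the one before."""
    n = quarters(fill)
    return [(MAX_INTENSITY * (i + 1) // 4, 50, 150) for i in range(n)]


def single_click_intensity(fill: float) -> List[Pulse]:
    """A single click whose strength alone communicates the level."""
    n = quarters(fill)
    return [(MAX_INTENSITY * n // 4, 80, 0)] if n else []


def intermittent(fill: float, duration_ms: int = 1000) -> List[Pulse]:
    """Steady on/off buzzing for duration_ms that repeats faster as the cup fills."""
    n = quarters(fill)
    if n == 0:
        return []
    period_ms = 400 // n  # 400, 200, 133, or 100 ms from one pulse start to the next
    on_ms = period_ms // 2
    return [(MAX_INTENSITY, on_ms, period_ms - on_ms)] * (duration_ms // period_ms)


# Usage: print what each pattern would play for a half-full thermos.
for name, pattern in [("clicks", clicks), ("ramping clicks", ramping_clicks),
                      ("one click, intensity only", single_click_intensity),
                      ("intermittent", intermittent)]:
    print(name, pattern(0.5))
```

Describing every pattern as the same kind of pulse list keeps the comparison fair: the motor hardware and placement stay fixed while only the encoding changes between test conditions.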

Task One Goal: Determine the best placement of the haptic sensors and judge how many are needed.

Task Two Goal: Determine the best haptic vibrations to effectively communicate how much liquid is within a thermos.

WHAT OUR TEAM LEARNED/NEXT STEPS

Our team realized that there are many factors we would need to consider for testing and for the final product. After testing, we wondered whether the sensors would feel different if they were placed inside the cup or between two layers of the cup, and whether the vibrations would be just as strong. We did not have time to test these questions, but they would be important in future work. It would also have been useful to interview or test with users who are visually impaired, since all of our insight came from secondary research. Testing with actual users could give us unique feedback and answer questions that came up during our process.

We considered many other factors, but because they would require additional research and time, we decided to leave them for the next phase if we continue the project:

• Primary research with people who are visually impaired

• Whether the thermos needs a charger

• What material would be used

• Would it be dishwasher safe?

• The cost of the thermos

• Other vibration patterns

• Placement of the sensors: between two layers or on the outside